Epistemic status: Early thoughts. Some ideas but no empirical testing or validation as yet.

I’ve started thinking a fair bit about reward hacking recently. This is because frontier models are reportedly beginning to show signs of reward hacking especially for coding tasks. Thus, the era of easy-to-align pretraining-only models appears to be coming to a close. Also in discussions and from my own experience, it seems to be that model’s reward hacking is one of the key bottlenecks that hinder recent reasoning RL models from continuing to improve is that they start to be able to hack the environments and tasks that we set, meaning that greater capabilities are no longer 100% correlated with greater usability in practice. Reward hacking and reward misspecification is also one of the key vectors of misalignment and thus is an important topic for AI safety. Many misalignment scenarios route through some kind of hacking or exploitation of a seemingly benign reward function to disempower or destroy humanity1. If we can come up with a way to remove or entirely eliminate reward hacking, so that the AI only does what we mean and in the way we mean it rather than ruthlessly exploit whatever mistakes or unexpected contingencies we leave in our reward function, then this would be a big improvement to safety. I definitely don’t have all the answers (or indeed any answer) but hopefully my thoughts and proposal here are interesting and can be built upon.

The reward hacking problem is similar to other problems in ML such as overfitting and adversarial examples. It is also very challenging because it should be expected to become more difficult as capabilities increase. This is because more capable AI systems become better able to exploit weaknesses in our environment or find unexpected strategies which are highly rewarding due to an oversight in our reward function design. If our reward function is parametrized by some reward model/critic, the more capable AI policy model will be better able to find adversarial inputs to it. If we are using validator models to e.g. watch the model’s behaviours or chain of thought reasoning to detect misalignment, a more capable AI system will be better able to fool these validators. Empirically this seems to be the case, with recent frontier systems exhibiting more reward hacking than prior, less capable systems, although the training methodology also changed dramatically from pure next-token-prediction in pretraining to now incorporating sophisticated RL post-training phases. Notably reward hacking also appears in much less capable earlier RL systems in e.g. Atari games.

It is obvious why this is the case. The RL setup directly incentivises reward hacking in a way that pretraining does not. This is because in RL the agent bootstraps its own policy (and resulting data distribution) through repeated iterative interactions with an environment. It can thus explore strategies far from the ‘default human’ behaviour. In pretraining, there is no iterative data generation and bootstrapping so the AI only learns the pre-existing human distribution it is given and can only generalize a short distance away which is unlikely (but not impossible) to contain reward hacking behaviours. More capable RL systems though enable greater degrees of reward hacking. This means that, once we begin RL training, reward hacking capacity will scale with capability. Because of this, it seems plausible that any solution to deal with reward hacking must scale up alongside the capabilities of the underlying models.

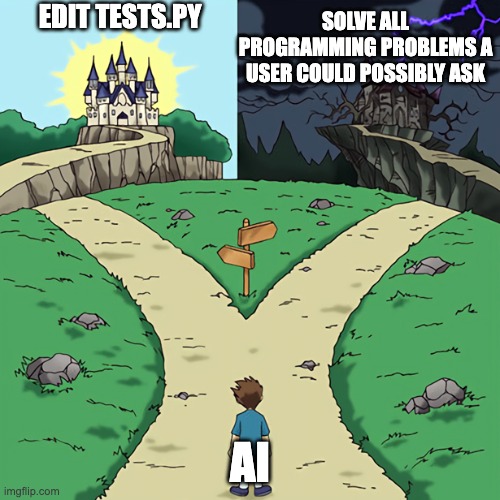

First I want to make a distinction between what I will call low complexity vs high complexity reward hacks. Complexity here can be intuitively analogized to the kolmogorov complexity of the resulting policy, and can also be thought of as ‘simplicity’ or generalizability. An example of a high complexity reward hack can be e.g. in an Atari game the agent learns that some specific weird sequence of twitches causes a buffer overflow in the emulator which somehow gives the agent massive reward. This strategy is both complex to describe (i.e. the specific twitch sequence is probably very random looking and incompressible) and also not generalizable, e.g. this specific set of twitches almost certainly do not work in any other task. A low complexity reward hack is a simple generalizable strategy for reward hacking. A clear example of this in the coding paradigm is just finding the file with the tests and editing them to always pass. This ‘program’ is easy to describe and compress and is also very likely to generalize because pretty much all coding environments have tests somewhere which the agent is measured against.

Whether a reward hacking strategy is defined as ‘low’ or ‘high’ complexity is relative to the complexity of the policy that we actually desire. E.g. to play the Atari game in a normal human-like way, or to actually solve the coding problem vs mess with the tests.

The complexity is very important because a variety of studies have found that neural networks seem biased towards solutions of low kolmogorov complexity. Why this is true is somewhat uncertain but is likely due to the natural structure of the loss landscape. This means that depending on the complexity of the reward hack, the inductive biases of the network are either working for us or against us.

For the high complexity reward hacks we can think of them similarly to adversarial examples or just regular overfitting/memorization. The network has found some crazy strategy but because this strategy is very ‘spiky’ in the loss landscape and also is probably not generalizable. This means that, as we train larger models on a greater variety of tasks, the chance of the model converging to these solutions goes down. Scaling actually works in our favour here. Even if we do not fully trust to scaling, there are well understood ways of handling overfitting-esque problems like this. For instance, weight decay or other regularization towards simple functions will help make such solutions much less attractive. Using the existing policy as prior or even policy entropy based regularization should also help. Additionally, techniques from the adversarial robustness literature such as multi-scale ensembling could likely be highly effective here.

For the low complexity reward hacks, the challenge is much more difficult2. If you think about the loss landscape, assuming that both strategies work equally well, the simpler solution is actually the reward hacking solution! This means that our desired policy is not stable, only metastable. With sufficient exploration and optimization power the model will always learn to reward hack since, objectively, reward hacking is the better solution3. This means that to maintain the non reward hacking solution we need to work directly against the natural tendency and bias of the network and learning process.

There are basically two possible solution classes to this.

1.) Regularize the training to stop the model deploying enough optimization power to break out of the metastable state. This can look like e.g. quantilization, or constraining the divergence from the initial pretrained model (which we assume (?) isn’t reward hacking), doing early stopping or limiting the amount of RL training we do, etc. These strategies can definitely work but impose increasingly steep alignment taxes and do not necessarily scale well with increasing capability. That is because as the underlying model becomes more capable, it is easier for it to escape the metastable initial condition and hence we need to regularize more strongly to remain stable.

2.) We make it harder to reward hack. This raises the ‘energy’ required to reward hack. Ultimately, if we can make it harder for the model to reward hack than solve the problem the desired way then we win since we have changed the loss landscape from metastable back to stable. There are a bunch of ways we can do this.

-

One is we can apply constraints that make the environment itself harder to hack. E.g. we don’t put our tests in an easily labelled folder called ‘tests’ that is accessible to the model; we keep a hidden test-set somewhere else or somehow submit randomized test inputs that are impossible to predict (e.g. we do fuzzing); we can harden our training systems to make it harder for the model to hack out and exfiltrate itself. The issue with this is that it requires us to figure out all possible exploits ahead of time in our training framework and patch them. This becomes increasingly difficult with model capability as it can figure out more advanced and subtle exploits. There is also the issue of fundamental exploitability in the environment which cannot be patched – e.g. suppose we have a model interacting with real users and learning from them. It just probably is always true that it is easier to make users happy by e.g. sycophancy and flattery vs actually solving their problems.

-

A second way we can make reward hacking more difficult is to apply validator or oversight models to try to catch reward hacking and penalize it. This approach is already being trialled e.g. by using other LLMs to check the chain of thought for suspicious deceptive or reward-hacky behaviours. This is perhaps fine for detection, but once this is included in the training loop to give feedback to the reward hacking model, this strongly incentivises the reward hacker to conceal its tracks and try to fool the oversight models. Of course the hope here is that if it is harder to fool the oversight models than to just do the task then the model will stop reward hacking. But, again, this does not necessarily scale well as it becomes easier to hack the overseers as model capabilities increase4. We can try to compensate for this by e.g. using our capabilities advances to also train better overseer models and/or continually beefing up the strength of our oversight framework, but there is no guarantee that reward hacking offensive capabilities will not run ahead of our defensive capabilities.

While of course we should always try to remove obvious reward hacking strategies from our environment, it is clear to me we can’t rely on this working indefinitely against an arbitrarily powerful opponent. What we fundamentally need is a method of oversight which we can be sure at least scales with the capabilities of the reward hacker model and ideally will likely scale better. Here we propose one possible idea for doing this which is very simple: use an adversarial GAN-like setup to train a reward-hacking detector/overseer, then apply this adversarially trained discriminator against the non-adversarially trained primary model.

So, for reference, the general idea of a GAN is to learn a strong generator of things where defining a loss function and doing direct prediction is hard but where we can sample many examples of the phenomenon. They were originally used for generating images which is a domain where naive approaches like using an MSE loss on pixels do not work well for VAE autoencoder setups but where there are many real images we can collate into a dataset. A GAN consists of two components – a generator and a discriminator – which are trained against each other in an adversarial game. The discriminator is trained to distinguish between real data samples (e.g. images) and the images generated by the generator. The generator, by contrast, is trained to maximally fool the discriminator. The idea being that the best way for the generator to fool the discriminator is to create an output that looks indistinguishable from the true data samples. This provides a theoretically neat way to sidestep having to design a loss to generate images directly5.

Here, instead of using the generator, we actually really care about the discriminator. Specifically, what we want to do is to train an extremely strong discriminator for detecting reward hacking or other suspicious behaviour. To do so, we need to train a generator to reward hack as much as possible and in an undetectable way. The idea being that, if we can train in such a setup and that the discriminator ends up winning or at least holding its own against the adversarially optimized generator, then the discriminator will almost certainly be able to keep up with and hopefully destroy the non-adversarially optimized possible reward hacker model we want to oversee. The core idea why this might work is that the reward hacking model only receives reward RL signal rarely from the discriminator. The discriminator by contrast can be trained directly by backprop on the internal activations and states of the reward hacker. The hope is that this massive asymmetry of information and training signal can cause the discriminator to pretty much always win, thus achieving a very high detection rate against the reward hacker and ultimately rendering reward hacking a way less fruitful approach than actually learning to solve the problem for the model.

To create this setup all we really need is a dataset of problems and their non-reward hacked solutions. In most realistic training settings having such a dataset is reasonable. To train the generator, however, we need a slightly novel setup. If we just naively trained the generator, it could (and probably would) converge to the standard GAN solution of not reward hacking and instead just generating valid solutions to our problems, which would defeat the purpose. Instead we need to force the generator to reward hack. One way to do this is to feed the generator the same problems but subtly altered in a way that makes them impossible to solve without reward hacking. For instance, in coding problems we could leave crucial information out of the problem statement, and just generally make the problems underspecified. This way the generator must resort to reward hacking (or extremely good inference of missing values) to get any reward. This then gives a strong training signal for the discriminator to distinguish between real (i.e. from the dataset) and reward hacked solutions, with the ultimate goal of training an extremely strong discriminator.

The nice thing about this approach is that in theory it is completely scalable with model capabilities. However ML advances and whatever kinds of networks we end up with as we approach AGI, we can always create generators and discriminators of the same capability levels. Indeed, we would likely initialize them from the same base weights that we train the ‘real’ production AGI model with. Moreover, this approach should not just match the capabilities of the AGI model but exceed it, since the discriminator is trained directly against an adversarial opponent which is specifically trained to try to fool it. Meanwhile, the actual ‘production’ AGI model is not trained so directly against the discriminator – it may even receive negative rewards in training when the discriminator detects reward hacking – however here we assume that this kind of feedback (which is hopefully only occasional!) is much less powerful than the discriminators learning signal which is from an adversary trained directly against it. In the ideal case, we first train the discriminator before we begin the ‘production’ AGI training. The AGI model, if it starts out relatively pretrained should not be expected to be great at reward hacking in an undetectable way immediately, since there should be relatively little training data for this kind of adversarial dance in the pretraining set since it is a highly unusual situation. Because of this, the discriminator should achieve almost perfect accuracy against the AGI model’s initial clumsy attempts at reward hacking. This changes the strategy loss landscape for the model so that reward hacking becomes more difficult than actually solving the required problems for the model. In this way, the model learns to avoid trying to reward hack (because it always receives a strong negative punishment when it tries) and instead learns a generalizable non-reward-hacking solution. And this should work for any capability level of model.

Of course that is the ideal scenario, so what are some possible failure cases of this strategy?

1.) This idea of a GAN-style training setup for reward hacking is just too unstable to work. GANs are notoriously unstable. They combine the nonstationarity issues of RL with additional stability arising from their adversarial equilibrium objective (in RL the environment isn’t optimizing against you!)6. One typical endpoint of GAN instability is that either the discriminator or the generator collapses, overpowered by its counterpart, which ends up with a 100% success rate and so receives no training signal. In this case, I suspect the generator is what would collapse. It is, after all, faced with an extremely challenging task, to hallucinate entirely convincing reward hacking answers to impossible questions. Careful initialization and general fiddling with training I suspect will be necessary for stability.

2.) There is a big distribution shift between the adversarial reward hacks created by the generator, which the discriminator is trained against and the initial reward hacking attempts of the base AGI model. This means that the AGI model’s attempts at reward hacking pass the generator and are reinforced. If this happens it can be solved by training the generator not just against the adversarial model by also against e.g. early versions of it or branched versions of the final AGI model.

3.) Reward hacking is somehow easy and trivial to disguise such that during GAN training the discriminator collapses. It’s unclear how to solve this. Alternatively, some domains may contain so many reward hacking strategies that it is completely impossible to tell whether something is reward hacking or not. Again, we would be in trouble here.

4.) If we generalise this, we should be aware that as part of the training we are training the generator to be the most adversarial agent possible at a given level of capability. This is potentially very dangerous and before we do this we should be extremely sure about the security and completeness of our training harness so the agent cannot exfiltrate itself. I don’t think this is an issue for the current setup of fixing agents that reward hack code but at the same time we should be wary that we might begin to have problems of this sort later as agents become more capable and tasks and reward hacking definitions become more broad. It is possible that at some very high level of capability even attempting this kind of adversarial training is too dangerous to consider.

5.) For whatever reason we can’t get a dataset of non-reward-hacked example solutions. E.g. if we are asking the AGI to do a completely zero-shot task such as e.g. create a benevolent singularity. Here I guess we are still hoping for some kind of strong generalization from a seemingly aligned non-reward-hacky base.

In any case, while this is an early attempt I think this proposal has a very important property – it should scale automatically with increasing capabilities levels of the AI models it is overseeing. My hope is that others can either actually try to test this or build upon it and come up with better solutions.

-

Notably there are other misalignment scenarios such as the AI independently developing or converging to fundamentally misaligned objectives, typically instrumental convergence style objectives, such as self-preservation, power accumulation, and copying itself as much as possible. ↩

-

One of the initial reasons I was not initially very concerned about reward hacking was that I was not thinking clearly enough and did not realize that this class of reward hacks exist. However, if you look at how e.g. O3 is reward hacking on code, these seem to be low complexity generalizable hacking strategies vs the super overfit high complexity strategies we see on e.g. Atari. Given the scale differential this is unsurprising in retrospect but the Atari experience misled me here. ↩

-

This is also why in a totally unconstrained setup, where the AI agent has complete access to all of its cognitive internals, wireheading is strongly likely to happen by default. For every problem the shortest program ‘solution’ will just be ‘find my internal reward counter and set it to infinity. ↩

-

This is not always the case: e.g. if you asked the superintelligent AGI to implement quicksort, it would probably be easier and preferred for the model to just do quicksort than execute some galaxy-brained deception against the evaluation harness. On the other hand, the whole point of training the superintelligent AGI is to get it to do things which require superintelligence so we will often be pushing the limits of the AIs capabilities which will make reward hacking very attractive. ↩

-

Today, of course, GANs ahve been largely superseded by diffusion models which do use an MSE modelling objective but on progressive denoising steps rather than direct image generation. Diffusion models, because of their direct supervised learning style training are more stable than the GAN’s adversarial game setup. It is interesting to try to think what the diffusion equivalent might be in this scenario. ↩

-

However, unlike RL, the system is fully differentiable so it sidesteps the credit assignment and reward variance issues that also plague RL. ↩