Yesterday I was rereading Richard Suttons Bitter Lesson, especially in light of the enormous recent success of hugely powerful language models (such as GPT3) and RL agents such as Alpha-go, MuZero, Alphastar, and Agent 57 which all broadly fit the pattern predicted in the post. We take existing algorithms and then throw very large amounts of computation at them and then performance improves dramatically and rapidly over the course of a few years.

Moreover, the promise is that due to Moore’s law, continued scaling will advance performance and capabilities without any additional algorithmic techniques being required. Sutton argues that previous waves of AI primarily focused on embedding human knowledge into agents which initially provided some improvements above the baseline but such methods were ultimately totally outclassed by techniques which leveraged large amounts of computation. For instance, chess agents with large numbers of handcrafted rules and heuristics eventually fell before the onslaught of tree-search and massive computation. A similar story has played out with Go where more heuristic and tuned agents were evetnaully defeated by reinforcement learning and simple value-function approximation methods combined with tree search. Starcraft, amazingly, fell to populations of RL agents competing with each other in a virtual tournament. State-of-the-art natural language processing methods now eschew any intrinsic knowledge of linguistics, treating language purely as a statistical problem to be tackled from scratch. Similarly, computer vision has moved from feature engineering, and learning complex hierarchies of SIFT features and HOGs, and Haar cascaeds to deep convolutional networks. Finally, the expert systems of the 80s, the ultimate endpoint of trying to encode human knowledge were incredibly fragile, brittle, and rapidly superceded by statistical methods.

In all cases, we have seen a victory for relatively general purpose statistical methods, combined with massive data and compute. While this progression of the field, and the lessons implicit in it, is unarguable, I think the general narrative painted above still raises a few very important questions and ignores nuance which is important if we want to understand better what is really happening. For instance, current machine learning methods surely also possess a huge amount of human knowledge embedded intrinsically into them. Although not explicit, huge amount of prior and empirical knowledge is contained within the network architecture, the datasets, the initialization techniques, the training mechanism, the activation functions, the loss functions, and so forth. All this knowledge hs been slowly and painstakingly empirically discovered and crystallised over several decades of research in total and especially due to the huge recent investments in ML techniques in the last decade.

Indeed, it is possible to argue that most of the advancements in AI have not arisen directly from increasing compute but rather the invention of new kinds of structure which can utilize increased computing power. No matter how much compute you throw at it (at the moment), you cannot classify Imagenet with only a structure-less multilayer perceptron of the kind generally used in the 90s. Similarly, basic RNNs fail miserably compared to transformers at language modelling even with substantially greater compute and data, and are not vastly better than the previous sophisticated N-gram models. Moreover, recent research has shown consistently declining compute using newer methods required to reach the AlexNet level of performance on Imagenet. This is definitely progress but it is hard to understand within Sutton’s framework. Is all this work just the same kind of tinkering and encoding knowledge that will be swept away by the next compute-heavy loads? Similarly, even in tree-search for playing games such as chess, the examples Sutton gives of compute-heavy methods, actual SOTA implementations do not simply perform naive breadth, or depth-first search but rather contain all manner of heuristics, exploration techniques, and learnt value functions. These surely encode ‘human knowledge’ about the kind of problems tree-search is applied to.

Of course Sutton would argue that this is completely in line with his thesis as these improvements are examples of improvements in search or learning. However, it is important to note that these improvements come directly from us as algorithm designers directly imposing additional structure on the problem to make it solvable. Simply the idea of adding structure and knowledge to help solve a task is not necessarily the problem – this is too simplistic an analysis. The key is that it must be the right kind of structure.

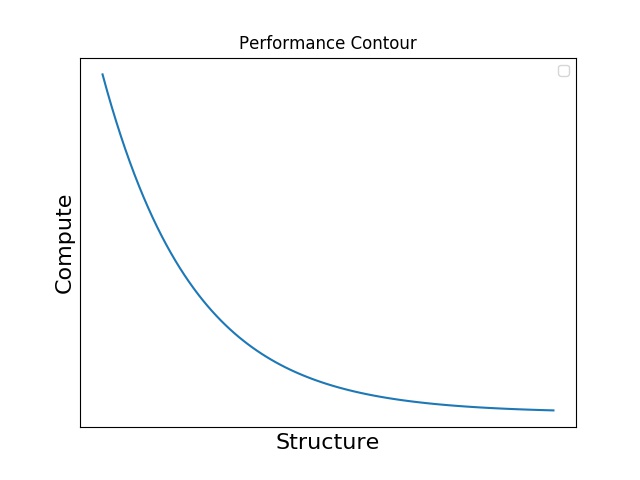

Let’s take a step back and look at this in more detail. We propose a simple model where the performance of an AI algorithm depends on two quantitites – ‘compute’ and ‘structure’, where compute is all the computationsl resources used by the algorithm, which includes datasets, while structure is the actual algorithm istself and all the human knowledge encoded into the algorithm implicitly or explicitly. For a given level of performance, then we can plot the amount of compute vs structure required that level of performance. I hypothesise (without very strong evidence – this is a ‘spherical cow’ model) that the relationship will be approximately a negative exponential, as plotted below:

Asymptotically, we know that any function and thus any task can be learnt with a minimum of structure by a 2-layer MLP with infinite width. This infinite width is an issue since it requires infinite computation, so in effect we have that any function can be learnt with infinite computation. This heavily suggests, moreover, that the approach to the infinite computation limit will be asymptotically exponential. By contrast, one could also model the same function by creating an explicit lookup table for the optimal label or action directly from every possible data element. This requires very little computation (obviously a huge amount of memory) but is perhaps the maximal amount of ‘structure’ that can be encoded into the problem. Moreover, due to the exponential increase in volume with dimension we know that the approach to this asymptotic limit is exponential. The key then is to reach the desired performance level with a minimum of compute and structure. Graphically this corresponds to finding the bend of the exponential curve where it is closest to the origin.

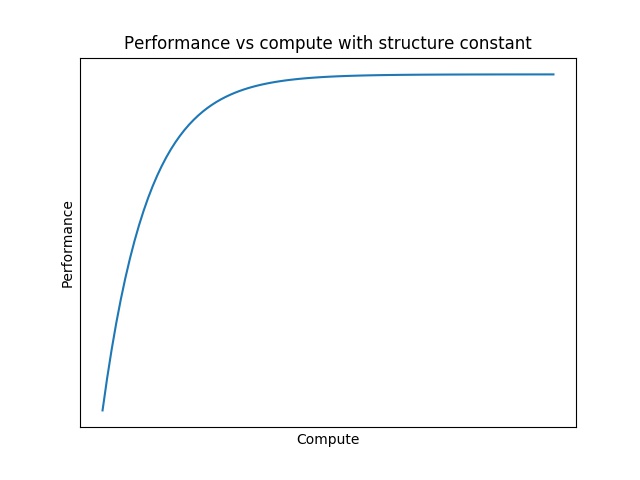

Moreover, we can also think about the effects of holding either computation or structure constant and varying the other while plotting the effects on performance. Again I suspect that these will be asymptoting exponential curves as below,

Again I lack hard evidence for this, but the shape of this curve derives directly from the common microeconomic assumption of diminishing marginal benefit, which is a good general rule to have as a prior. Although structure is hard to explicitly measure and vary, compute is relatively easy and so there is some evidence that we observe exactly this declining marginal benefit of computation on performance for very large AI models. For instance this paper from OpenAI shows exponentially decreasing performance gains from increasing compute and dataset size for extremely large GPT-3 style transformer models – i.e. the size of the dataset and amount of compute must be scaled up by an order of magnitude for a linear decrease in loss (this analysis is complicated by the fact that we don’t really know how an absolute value of some metric like the log-loss is actually related to quantities we actually care about, like the qualitative ‘translation ability’, and whether this relation is constant, or has some complex functional form.).

Given this, Sutton’s bitter lesson needs some nuancing. It is generally not pure computation which boots performance over the long run (it can be for a given model or perhaps even model class or ‘wave’ of research, but in the long run computation alone provides diminishing marginal gains). Rather it is the the marriage of computation and structure. The magic happens when vast computation is leveraged and applied to the right kind of structure. Sutton agrees with this implicitly. He says that research should focus on ‘learning’ and search. However the key point is that learning and search fundamentally requires human built (at least initially) struture to work. Indeed, without structure, the optimal learning and search algorithms are already known. Search is just a combinatorial search which is intrinsically exponential, while the optimal learning algorithms if exact Bayesian inference. The trouble is that both scale exponentialy with number of dimensions. Advances in these fields primarily come from working on the correct approximations, priors, and heuristics to make them work more effectively by cutting down the exponential space into a smaller and managable space which can be dealt with tractably. But ultimately this requires implicitly or explictly encoding human derived structure, or priors. The real question, then, and the core idea behind Sutton’s argument is not against structure and human input per se, but what is the best or most useful type of structure to enode. A key criterion here is that of scalability. Given a level of structure we can see the scalability of that level is the shape of the performance/compute curve where the slower the asymptote the more scalable is the method. We can also measure local scalability as the derivative \(\frac{dP}{dC} \Vert_s\) which might be something useful to measure to understand more about the methods. Likely the reason that the deep networks succeeded so greatly in the 2010s and not in the 90s is not entirely due to computational constraints (for instance compute AI has been increasing exponentially (much faster than moore’s law due to well-capitalised actors such as big tech companies and AI startups) but the initial results such as AlexNet were achieved with relatively modest computational investments. If a google-sized player in the 90s or early-mod 2000s had had the knowledge of the structure embedded with AlexNet, the computional cost would have been well within reach), but rather due to us not having figured out the correct kinds of scalable structure to impose upon our neural networks. CNNs, LSTMs,transformers, ResNets, attention, initialization schedules, SGD, and a whole bunch of empirical tweaking has been the primary driver of continued success not simply more compute. Once we had these innovations and scalable types of structure, then compute could be productively applied and the result was a hardware overhang and comcomitant explosion of progress in the past decade. This hardware overhang has been exploited extremely well by well capitalised companies such as OpenAI and DeepMind which have been posting incredibly breakthrows, and given that the exponential compute growh of AI is currently still going on, we can expect similar large breakthroughs at least for the next couple of years until we reach the asymptoting part of the sigmoid curve.

From this, a couple of questions and predictions directly emerge. First, as AI research has been currently exploiting the hardware overhang, we should generally expect progress to slow and become more algorithmic and less compute-driven over time, as compute will primarily be increasing due to moore’s law (which is itself slowing) rather than just scaling up existing capacity. ii.) SOTA compute heavy results will require vast resources of well funded corporations and so, as has been observed, groundbreaking practical results primarily come from that quarter, and this will become increasingly so. Academia will focus more on structure adding, and a lot of this will fall foul of Sutton’s argument about continually adding non-scalable structure, but some of it will be scalable structure and it will advance the field. Generally, progress will continue to happen by inventing new kinds of scalable structure and then leveraging massive computation to put it to the limits of performance.

So, as the key appears to be the discovery of scalable structure, is there any way to tell a priori whether a given type or instance of structure will scale well or not? It would be very surprising if there is a foolproof rule for this but empirical experience has given rise to several heuristics: 1.) Explicit rules and heuristics which directly encode human knowledge are unlikely to succeed. This is largely what Sutton is arguing against. This fails because it cannot scale with compute as directly encoded rules only requires a constant amount of compute. Also real domains are messy and humans cannot hand-craft rules for every possible contingency. 2.) Structure that exploits or enforces invariants generally works very well, and is probably necessary for effective learning. The power of CNNs is that they enforce translation invariance, for instance. Similarly, LSTMs through their memory gating mechanisms enforce time-warping invariance. Similarly the successes of data augmentation methods can be seen as enforcing invariances through the data instead of the architecture. 3.) In search, structure primarily comes from methods and heuristics for pruning away unlikely regions of the state-space. 4.) Flexible yet constraining approximations that maximally reduce degrees-of-freedom of the search space. This can arise through invariances, or else knowing or coming up with good extrapolation methods to assume that the space is not worth exploring further with a minimum of sampling required.

In general since the full search or learning space is exponential, the purpose of structure is to cut down this exponential space to a more tractable one which is ideally polynomial or below. Invariances are a powerful way to do this as they ultimately eliminate entire dimensions which are redundant. Other or approximation methods try to cut down this exponential space in various ways principally by ignoring large regions where it is assumed or inferred that the answer does not lie. For an extreme example, a mean-field variational approximation of N dimensions cuts down the exponential combinatorial dimensions of all combinations of the dimensions to a space linear in N by considering each dimension independently. Importantly these decisions of what structure to enforce, and thus what regions of the combinatorial space to ignore must be impliclty or explicitly be coded into the algorithm. The key to progress is ultimately thus finding the right kinds of structure that is scalable with computation but which ultimately and thus threads the happy medium between the exponential combinatorial blowup if the problem is too underspecified, and essentially cutting away too much of the solution space leading to nonscalable, fragile, and brittle solutions.